VR with Unity Game Engine for Scientists

An intensive five‑day bootcamp to build and test VR experiments in Unity.

Research Scientist

As AI becomes the default interface to computing, the real question is how the brain adapts at the neural and behavioral levels. I study Human-Computer Interaction (HCI), Human-Robot Interaction (HRI), and extended reality (XR) to reveal how perception–action circuits encode, predict, and recalibrate during interaction with machines. To test these mechanisms, I build tracking hardware, haptic devices, and XR tools that capture fine-grained sensorimotor signals and close the loop in real time. The goal is brain-informed experiences that strengthen perception, movement, and collaboration.

My work spans human–computer interaction, sensorimotor neuroscience, and rehabilitation. I study how people plan and adapt hand actions to reveal the mechanisms of neuroplasticity. These findings set measurement priorities, shaping study designs and clarifying clinical success criteria.

Hardware translates those priorities into instruments. I build hand and finger‑tracking systems with haptics that capture motion and deliver tactile cues. They enable experiments linking touch, action, and learning with the precision studies demand.

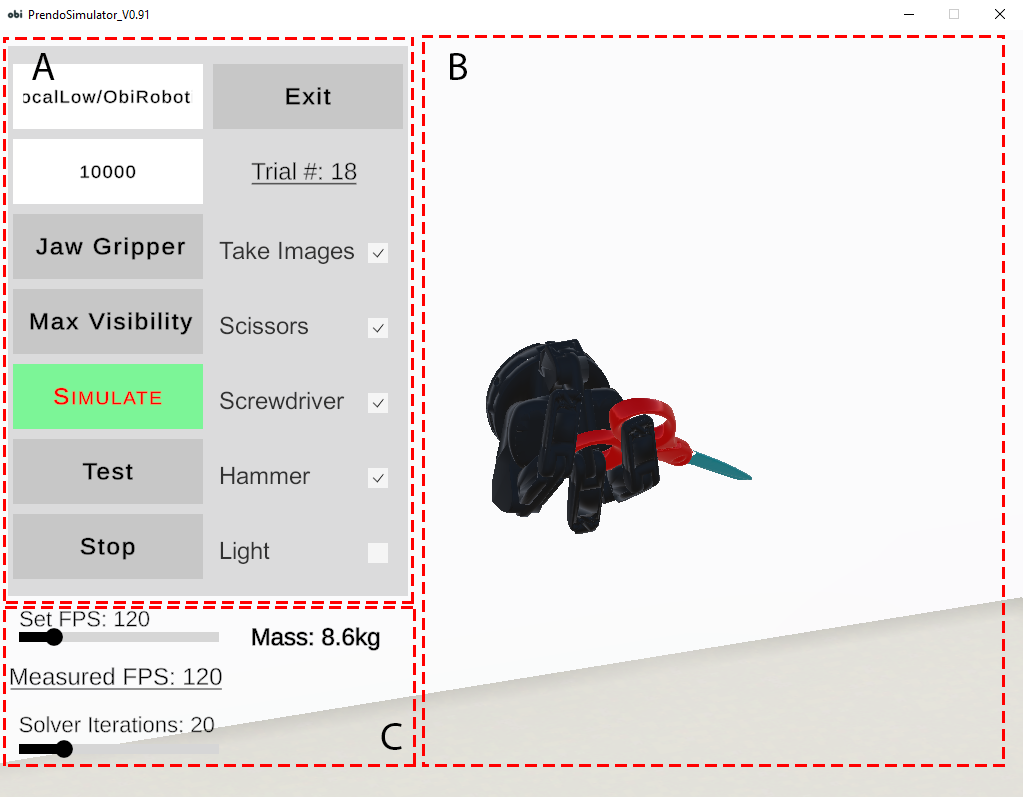

Software turns instrument data into understanding. I develop XR pipelines that link robots and game engines in real time, synchronize devices, and analyze movement. Outputs become interpretable measures of brain–behavior change and drive engaging rehabilitation experiences.

A three‑day, hands‑on introduction to setting up, capturing, and analyzing motion‑capture data.

A one‑day crash course in practical CAD and rapid prototyping for research.

Hands‑on demos that bring XR/AI into classrooms and local heritage experiences.