Diar Abdlkarim

Research Scientist

As a research scientist and engineer, I believe we are in the midst of a fundamental shift in how we interact with technology. This transformation is largely driven by recent innovations in AI, which is rapidly becoming our primary interface with digital systems. This unifying development allows us all to benefit more effectively from technology. My work sits at the intersection of scientific research and engineering in human-technology interaction (HTI), which encompasses human-computer interaction (HCI), human-robot interaction (HRI), and immersive technologies (e.g., extended reality, XR).

I conduct scientific research on human sensory-motor action and perception, developing advanced hand and finger tracking hardware, haptic feedback devices, and immersive XR software tools to study and enhance human-technology interaction in both physical and virtual environments.

Development of research-grade hardware for real-time hand and finger tracking, including health and fitness devices that support clinical assessment of human touch and the development of brain-computer interfaces (BCI), incorporating electroencephalography (EEG), electromyography (EMG), electrical muscle stimulation (EMS), pupillometry, and eye tracking.

Development of various software research tools, such as phone applications (see Tactile on iOS App Store), XR applications (see SideQuest App Store), and various communication tools for real-time data streaming between robotic devices and game engines like Unity or Unreal Engine.
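
As a rough illustration of what real-time streaming between a device and a game engine can look like, the Python sketch below sends one pose sample as a JSON datagram over UDP. It is not the actual tooling described above: the message fields (`t`, `pos`, `rot`), the function names, and the choice of UDP with JSON are all assumptions made for the example, and the receiver on loopback simply stands in for the engine side.

```python
import json
import socket


def encode_pose(timestamp_s, position_m, rotation_quat):
    """Pack one pose sample as a compact UTF-8 JSON datagram (hypothetical format)."""
    return json.dumps(
        {"t": timestamp_s, "pos": list(position_m), "rot": list(rotation_quat)},
        separators=(",", ":"),
    ).encode("utf-8")


def decode_pose(datagram):
    """Inverse of encode_pose; returns a plain dict."""
    return json.loads(datagram.decode("utf-8"))


def send_pose(sock, address, timestamp_s, position_m, rotation_quat):
    """Send one sample. UDP is fire-and-forget, so a lost packet is simply skipped."""
    sock.sendto(encode_pose(timestamp_s, position_m, rotation_quat), address)


if __name__ == "__main__":
    # Loopback demo: the receiver stands in for the game-engine side.
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))  # port 0 lets the OS choose a free port
    receiver.settimeout(1.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

    send_pose(sender, receiver.getsockname(), 0.0, (0.1, 0.2, 0.3), (0.0, 0.0, 0.0, 1.0))
    print(decode_pose(receiver.recv(1024)))

    sender.close()
    receiver.close()
```

UDP is a common choice for this kind of link because a stale pose is worth less than a fresh one, so dropping a packet is usually preferable to waiting for a retransmission.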

I designed and created this intensive 5-day course specifically to help new Master's and PhD students, as well as faculty members, who want to engage with virtual reality and learn how to develop VR-based scientific experiments. This is a hands-on course where I guide participants through the fundamentals of VR development in Unity, 3D environment design, interactive simulations, and data collection methods for research. By the end of the course, attendees become proficient in developing VR experiments for science and leave with the confidence to build their own research applications.

I created this 3-day intensive crash course to help scientists and students quickly gain hands-on experience with motion capture technologies. The course focuses on marker-based optical motion capture, while also introducing nearly all other types of motion capture systems. By the end of the course, participants are able to set up and calibrate a mocap system, and to collect, process, and analyze motion data for their own research applications.

I created this 1-day intensive crash course for anyone who wants to quickly become proficient in 3D modeling for 3D printing. The course is especially geared towards scientists who regularly need to design and fabricate cases, contraptions, and experimental stimuli using 3D printing. I guide participants through the essentials of CAD software and additive manufacturing, focusing on practical skills for creating custom research equipment and prototypes.

I was awarded an EPSRC-funded PhD focused on upper-limb rehabilitation using augmented, immersive biofeedback in virtual reality. This research explored how sensory-motor perception can be harnessed to improve rehabilitation outcomes. I developed VR-based tasks to train arm movement and perception in patients with motor impairment, integrating real-time tactile and visual feedback. The work demonstrated that adaptive multi-sensory cues in VR can significantly enhance motor recovery and functional performance, laying a foundation for novel rehabilitation protocols.

As a named postdoctoral researcher on a £1.5M EPSRC-funded project (the Augmented Reality Music Ensemble), I took responsibility for system integration, user evaluation, and technology development. Working in a multidisciplinary team, I led the design of interactive virtual musicians and synchronized ensemble experiences. I developed software prototypes and conducted experimental studies on collaborative music practice in immersive AR/VR. This role contributed to the project’s goal of enhancing musical collaboration and rehearsal through cutting-edge immersive technology and data-driven evaluation.

I secured a small proof-of-concept award (approximately £5K) to develop a touch-testing device for assessing peripheral neuropathy in chemotherapy patients. In collaboration with clinical partners, I designed an adaptive tactile test system to quantify sensory deficits caused by cancer treatment. This involved creating a portable device that delivers controlled touch stimuli and records patient responses, enabling objective measurement of neuropathy severity. The pilot implementation demonstrated feasibility of the approach and laid the groundwork for larger studies on neurotoxicity assessment in cancer care.
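
To give a flavour of what "adaptive" can mean in a test like this, the sketch below implements a generic 2-down/1-up staircase, a standard psychophysics procedure in which the stimulus gets weaker after two consecutive detections and stronger after any miss. This is an illustrative assumption, not a description of the procedure used in the device: the function name, the step size, the stopping rule, and the simulated participant are all hypothetical.

```python
def run_staircase(respond, start_level, step, min_level, max_level,
                  n_reversals=6, max_trials=200):
    """Run a 2-down/1-up staircase.

    respond(level) -> bool: True if the participant reports feeling the stimulus.
    Returns (threshold_estimate, trial_log); the estimate is the mean level at
    the reversal points, or None if no reversal occurred within max_trials.
    """
    level = start_level
    consecutive_hits = 0
    last_direction = 0        # -1: last change made the stimulus weaker, +1: stronger
    reversal_levels = []
    log = []

    while len(reversal_levels) < n_reversals and len(log) < max_trials:
        detected = respond(level)
        log.append((level, detected))

        direction = 0
        if detected:
            consecutive_hits += 1
            if consecutive_hits == 2:   # two detections in a row: make it weaker
                consecutive_hits = 0
                direction = -1
        else:                           # any miss: make it stronger
            consecutive_hits = 0
            direction = +1

        if direction != 0:
            if last_direction != 0 and direction != last_direction:
                reversal_levels.append(level)   # the track changed direction here
            last_direction = direction
            level = min(max_level, max(min_level, level + direction * step))

    if not reversal_levels:
        return None, log
    return sum(reversal_levels) / len(reversal_levels), log


if __name__ == "__main__":
    # Simulated participant who reliably feels anything at level 5.0 or above.
    estimate, trials = run_staircase(lambda lvl: lvl >= 5.0, 10.0, 1.0, 0.0, 20.0)
    print(estimate, len(trials))
```

The staircase concentrates trials near the level where responses flip between "felt" and "not felt", which keeps a session short compared with testing every intensity a fixed number of times.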

I was awarded a £15K Google Research gift (through the TextInputVault funding project) to explore innovative human–computer interaction paradigms for ten-finger typing in immersive VR/AR. In this work, I investigated how users can achieve touch-typing speeds using freehand gestures and novel controllers in virtual environments. I led experiments and prototype development to optimize typing performance, collaborating closely with industry researchers. The project aimed to expand understanding of efficient text entry in XR and identify techniques for enabling natural multi-finger typing in head-mounted displays.

I co-authored a comprehensive review of text input methods in virtual and augmented reality (the XR TEXT Trove) which was honored with the ACM Best Paper Award at CHI 2025. This work cataloged over 170 XR text-entry techniques and analyzed their design features. The TEXT Trove project was featured in a RoadToVR article describing our research initiative. Receiving the Best Paper Award highlights the impact of this review and our contribution to improving VR/AR text input usability through systematic analysis.

I was selected for the Innovate UK ICURe Explore programme, receiving £30K for a three-month full-time market exploration. During this period I refined the business model and market strategy for our augmented reality musician system (ARME project) and engaged with industry stakeholders. This programme provided structured support to assess the commercial potential of the AR ensemble technology. The ICURe funding accelerated our path toward translating research into practice by validating real-world applications for our AR musician platform.

Following the Explore phase, we have progressed to the ICURe Exploit programme, positioning us to form a startup around the AR musician technology. This next stage carries potential funding of up to approximately £300K for intensive company formation and growth support. Building on the market insights from Explore, we are now developing a business plan and seeking investment to commercialize the AR musician ensemble system. Advancing through ICURe underscores confidence in the technology’s market viability and moves us closer to our goal of a spin-out company in VR/AR music tech.

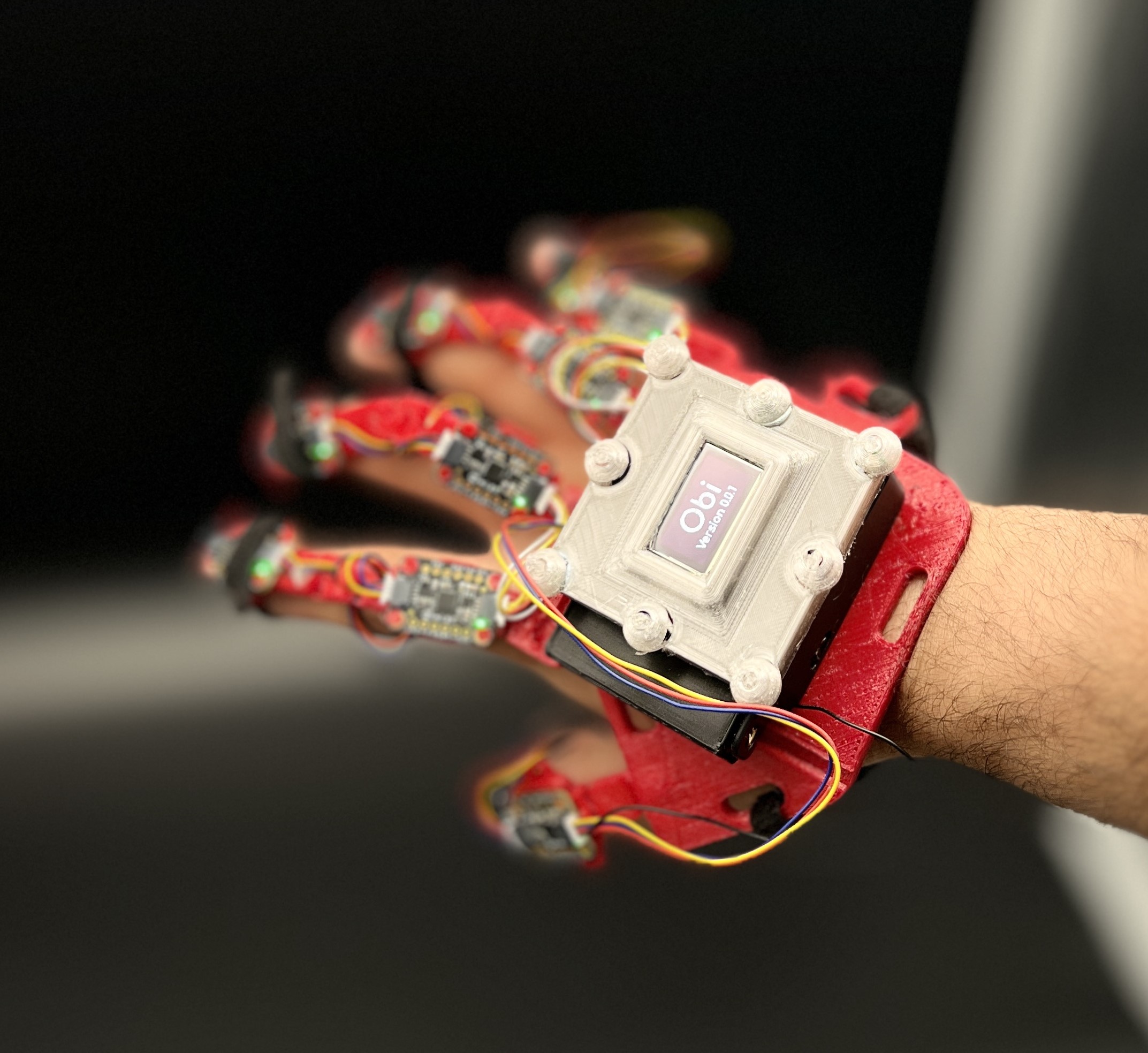

Modular Hand-Tracking Glove ("Obi" Project). I built a custom glove that captures every subtle movement of my hand and fingers. It began as a tangle of wires and tiny IMU sensors taped to a glove – a rough prototype that let a digital hand on my screen mimic my own. That early experiment evolved into a refined, modular glove system I call "Obi Reach." Each finger’s motion is tracked with up to 3 degrees of freedom, sampling at 240 Hz for smooth, real-time response. The glove even incorporates an optical tracking module for precise position in space, and I’ve embedded small haptic actuators to provide tactile feedback to the wearer. Designing this from scratch – including custom electronics – was challenging, but incredibly rewarding. Now I can use this glove for everything from immersive VR interactions to controlling robotic hands, and it’s easily customizable for new experiments.

This fingertip-mounted haptic device enables users to feel the sensation of pressing virtual buttons and encountering bumps in digital environments, creating a tangible sensory link to virtual worlds. The device features a compact housing and an indentor mechanism that converts rotary motion from a small geared motor into precise linear indentation of the fingertip. Real-time force feedback allows for immersive, engaging experiences by rendering tactile cues directly to the user. Beyond enhancing VR immersion, this tool is valuable for human-computer interaction research and assessing sensory-motor performance, such as evaluating patients with peripheral neuropathy and related conditions.

This fingertip-mounted haptic device delivers both low and high frequency vibrotactile signals to the user's fingertips, simulating the sensation of texture during exploratory touch and virtual object interaction. By providing nuanced vibration patterns, the device enhances the perception of surface qualities and material properties in digital environments. These tactile cues complement visual and auditory feedback, enriching multi-sensory experiences during active engagement. Clinically, the device is valuable for sensory assessment, enabling the evaluation of tactile function in patients with conditions such as Carpal Tunnel Syndrome (CTS), vibration-induced neuropathies, and other disorders affecting hand and finger sensation.

This desktop, grounded device is designed specifically for clinical research and assessment of sensory-motor performance. Unlike fingertip-mounted haptic devices, this system provides precise vibrotactile stimuli to the user's fingertip while simultaneously measuring the force applied by the user. This dual capability enables comprehensive evaluation of tactile sensitivity and motor control, which is essential for diagnosing and monitoring conditions such as Carpal Tunnel Syndrome (CTS), vibration-induced neuropathies, and other disorders affecting hand function. The device is not intended for virtual or augmented reality applications, but rather for use in clinical and research settings. It is accompanied by a dedicated phone application that wirelessly interfaces with the device, allowing clinicians and researchers to run a variety of preset assessments and collect data either on-site or remotely, streamlining the process of sensory-motor evaluation.

As a fun experiment in combining simulation and tactile feedback, I created a virtual reality pool game in my home setup. I can step into a VR pool hall, grab a virtual cue, and line up shots just as in real life. The special part is the custom haptic twist I added: I connected my wrist squeeze-band to the game. When the cue strikes a ball or the ball sinks into a pocket, the band gives my wrist a quick squeeze or pulse. The first time I tried it, I felt that thud “for real.” This project blends my love of games with my obsession for realism in VR, and it taught me a lot about syncing physical feedback with virtual physics.

To bring a sense of touch into my virtual experiences, I developed a haptic wristband that squeezes my wrist in sync with events in a simulation. I built this to explore whether pressure cues could make virtual actions feel more real – like the jolt of a virtual ball hitting a pool cue or the weight of a digital object. The design went through many iterations to get the squeeze just right: firm enough to be felt, but comfortable and safe. When something happens in VR, a small motor tightens the band for a split second, translating visual impact into a tactile one. It’s a quirky gadget, but strapping it on genuinely adds immersion – my brain starts interpreting those squeezes as if I’m touching the virtual world.
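
One simple way to turn a virtual impact into a split-second squeeze is to scale the pulse with the collision speed and clamp it to a comfortable range. The Python sketch below shows that idea only; the `SqueezePulse` type, the function name, and every numeric limit (speed ceiling, intensity floor, pulse durations) are assumptions chosen for illustration, not the values or the control scheme used in the actual wristband.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SqueezePulse:
    intensity: float   # normalized motor drive level, 0.0 to 1.0
    duration_ms: int   # how long the band stays tightened


def pulse_for_impact(impact_speed, max_speed=5.0, min_intensity=0.2,
                     min_ms=40, max_ms=150):
    """Map a collision speed (m/s) to a brief squeeze pulse.

    Speeds at or below zero produce no pulse. Speeds above max_speed are
    clamped, so the squeeze never exceeds the configured maximum.
    All default limits are illustrative assumptions.
    """
    if impact_speed <= 0:
        return None
    strength = min(impact_speed / max_speed, 1.0)              # 0..1 after clamping
    intensity = min_intensity + (1.0 - min_intensity) * strength
    duration_ms = round(min_ms + (max_ms - min_ms) * strength)
    return SqueezePulse(intensity, duration_ms)


if __name__ == "__main__":
    for speed in (0.5, 2.5, 10.0):
        print(speed, pulse_for_impact(speed))
```

The intensity floor keeps even gentle contacts perceptible, while the clamp reflects the "firm enough to be felt, but comfortable and safe" constraint.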

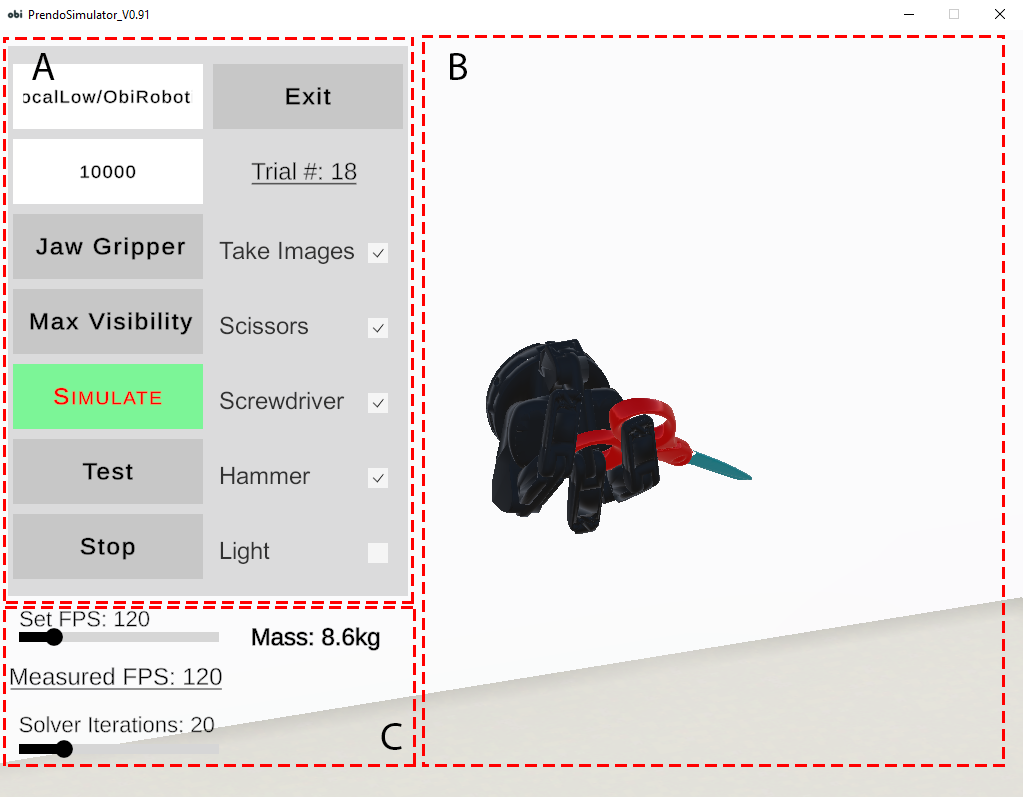

This project is about creating realistic hand-object interactions without custom interaction code, relying instead on the physics engine provided by the game engine (Unity in this case). Users' hands can naturally interact with the virtual world, hold objects, press buttons, and manipulate the environment in a physically plausible manner.

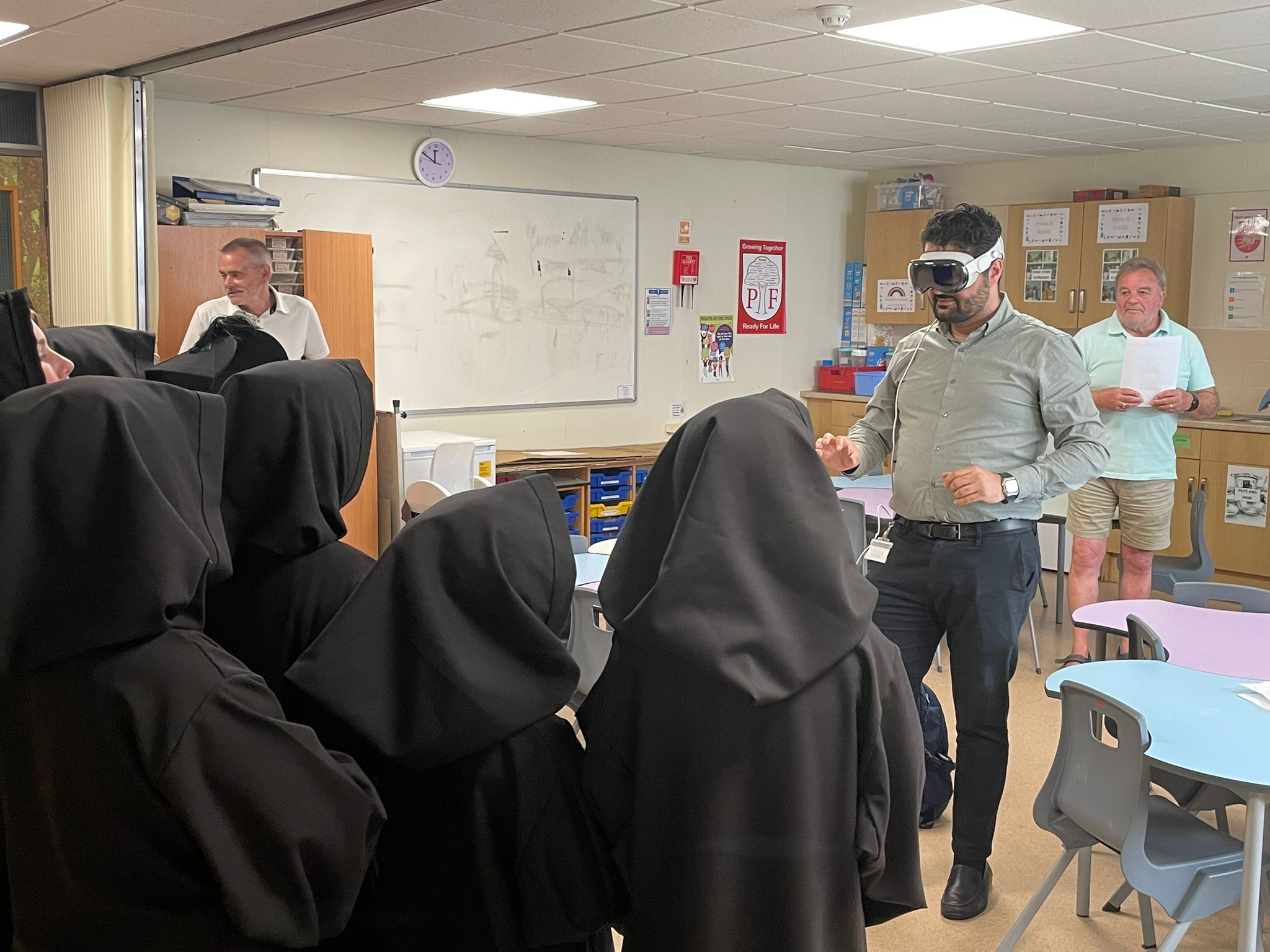

I am actively engaged with the Warwickshire School District to introduce immersive and AI technologies to primary school students. This initiative aims to stimulate early interest and learning about cutting-edge technologies by bringing VR headsets, AI demonstrations, and interactive experiences directly into classrooms. Through hands-on workshops and guided demonstrations, students get to experience virtual reality environments, interact with AI systems, and learn about the future of technology in an accessible, age-appropriate manner. This early exposure helps cultivate curiosity and understanding of emerging technologies, potentially inspiring the next generation of scientists, engineers, and innovators.

I am collaborating with the Kenilworth Town Council to create immersive digital representations of the town's rich heritage through a new mobile phone application with augmented reality capabilities. This project involves developing an AR app that allows visitors and locals to explore historical sites, buildings, and landmarks through their smartphones. Users can point their cameras at specific locations to see historical overlays, interactive information panels, and virtual reconstructions of how the town looked in different time periods. This innovative approach to heritage preservation and tourism combines modern technology with historical storytelling, making the town's fascinating history more accessible and engaging for everyone.

Biotti, F., Sidnick, L., Hatton, A. L., Abdlkarim, D., Wing, A., Treasure, J., Happé, F., Brewer, R. (2025).

Behavior Research Methods, 57(5), 133. Springer US New York.

Bhatia, A., Mughrabi, M. H., Abdlkarim, D., Di Luca, M., Gonzalez-Franco, M., Ahuja, K., Seifi, H. (2025).

In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (pp. 1–18).

Gonzalez-Franco, M., Abdlkarim, D., Bhatia, A., Macgregor, S., Fotso-Puepi, J. A., Gonzalez, E. J., Seifi, H., Di Luca, M., Ahuja, K. (2024).

In 2024 IEEE International Symposium on Emerging Metaverse (ISEMV) (pp. 13–16). IEEE.

Watkins, F., Abdlkarim, D., Winter, B., Thompson, R. L. (2024).

Scientific Reports, 14(1), 1043. Nature Publishing Group UK London.

Abdlkarim, D., Di Luca, M., Aves, P., Maaroufi, M., Yeo, S.-H., Miall, R. C., Holland, P., Galea, J. M. (2024).

Behavior Research Methods, 56(2), 1052–1063. Springer US New York.

Li, M. S., Tomczak, M., Elliot, M., Bradbury, A., Goodman, T., Abdulkarim, D., Di Luca, M., Hockman, J., Wing, A. (2023).

Tomczak, M., Li, M. S., Bradbury, A., Elliott, M., Stables, R., Witek, M., Goodman, T., Abdlkarim, D., Di Luca, M., Wing, A., et al. (2022).

arXiv preprint arXiv:2211.08848.

Ortenzi, V., Filipovica, M., Abdlkarim, D., Pardi, T., Takahashi, C., Wing, A. M., Di Luca, M., Kuchenbecker, K. J. (2022).

In 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 256–264). IEEE.

Abdlkarim, D., Ortenzi, V., Pardi, T., Filipovica, M., Wing, A., Kuchenbecker, K. J., Di Luca, M. (2021).

In 18th International Conference on Informatics in Control, Automation and Robotics. SciTePress Digital Library.

Schuster, B. A., Sowden, S. L., Abdulkarim, D., Wing, A. M., Cook, J. L. (2019).

Frontiers in Human Neuroscience, 13.

Watkins, F., Abdlkarim, D., Thompson, R. L. (2018).

In 3rd International Conference on Sign Language Acquisition (Istanbul: Koç Üniversitesi).

I am a futurist with an optimistic belief in technology’s power to improve our lives. My passion lies in exploring how the human nervous system can interface with machines, especially as artificial intelligence transforms our world. From building custom haptic devices and VR gloves to developing immersive simulations, I strive to create technology that brings us closer to our digital experiences. My research is driven by curiosity about how we sense, move, and interact, and how new tools can expand what it means to be human in the age of AI.